HARD DECK

Hard Deck is a grounded 2D helicopter shooter built solo in Unity, structured around physical constraints, systemic combat, and narrative pressure. The project combines Metroidvania progression, Souls-like tension, and tactical top-down combat into a single, coherent design. Every system—movement, AI, damage, repairs, narrative delivery—follows one philosophy: the helicopter is a vulnerable machine in a hostile world, and survival has weight.

As the sole developer, I own the full pipeline: architecture, tools, gameplay systems, shaders, art direction, and narrative design. The project is built on a modular event-driven architecture, a unified pooling system, and a state-driven AI that shares the player’s flight model. Visuals follow the systems—pseudo-3D rigs, normal-mapped lighting, and lightweight shaders form a grounded aesthetic aligned with performance targets.

The narrative grows from mechanics rather than cutscenes: altitude, damage, repairs, scarcity, and environmental evidence form the core storytelling tools. Hard Deck’s structure, style, and tone emerge from the same technical foundations, resulting in a unified experience where every choice shapes the world and the aircraft that carries the player through it.

Event-Driven Core Architecture

At the foundation of Hard Deck is a hybrid communication system built around event-driven design. It combines persistent singleton managers, ScriptableObject-based events, and local UnityEvents in a unified framework, allowing AI, UI, VFX, pooling, and other systems to communicate without hard dependencies.

Pooling System

Hard Deck relies on a constant flow of short-lived gameplay elements: missiles, tracers, smoke bursts, shockwaves, surface decals, dust interactions, and UI feedback. To support this density without unpredictable spikes, the Object Pooling System is treated as a core part of the game’s architecture rather than an optimization layer added late.

State-Driven AI

Enemy logic is built on a modular, state-driven pattern. Each behavior—movement, pathfinding, targeting, firing—runs as an independent component that can receive state-change events from the sensor system or from other components. This approach allows flexible, readable AI behaviors without the overhead of behavior trees or hard-coded transitions.

AI-Assisted Development

AI is an integral part of the production pipeline. Drawing on experience in team communication and project planning, I adapted the same principles to collaboration with AI: defining clear goals, prompting for structure, and iterating on solutions. This partnership closed my gap in software engineering skills, enabling me to adopt best practices far faster than traditional solo learning would allow.

The system started as a solution to Unity’s limitation with direct event links and scene-based object references. Instead of hardcoding dependencies, I created three coordinated layers:

1. Persistent Singletons

Core managers live in a dedicated additive scene, remaining active throughout the game. This ensures consistent communication and stable data flow.

Examples:

Object Pool Manager: Handles reusable objects for weapons and effects. A rocket pulling smoke, sound, flash, and shockwave from one shared pool forms complex sequences without allocations.

UI Data Manager: Stores shared values (ammo, heat, health). Weapons update values here; multiple UI elements subscribe and react immediately.

This layer enables dynamic querying and clean scene transitions.
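
The "store once, notify many" idea behind the UI Data Manager can be sketched in a few lines. This is an engine-agnostic Python illustration rather than the project's Unity C# code; the class name, method names, and the "ammo" key are hypothetical.

```python
from typing import Callable, Dict, List


class UIDataManager:
    """Hypothetical shared value store: writers set values, UI widgets subscribe."""

    def __init__(self) -> None:
        self._values: Dict[str, float] = {}
        self._listeners: Dict[str, List[Callable[[float], None]]] = {}

    def subscribe(self, key: str, listener: Callable[[float], None]) -> None:
        self._listeners.setdefault(key, []).append(listener)
        # A late subscriber immediately receives the current value, if any.
        if key in self._values:
            listener(self._values[key])

    def set(self, key: str, value: float) -> None:
        if self._values.get(key) == value:
            return  # unchanged value: skip redundant notifications
        self._values[key] = value
        for listener in list(self._listeners.get(key, [])):
            listener(value)


ui_data = UIDataManager()
ammo_counter: List[float] = []  # stands in for a numeric ammo label
ammo_bar: List[float] = []      # stands in for an ammo bar widget
ui_data.subscribe("ammo", ammo_counter.append)
ui_data.subscribe("ammo", ammo_bar.append)
ui_data.set("ammo", 12)  # a weapon reports its ammo
ui_data.set("ammo", 11)  # both widgets receive the update
```

The weapon never references a widget, and widgets never reference the weapon; both only know the manager and a key.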

2. ScriptableObject Events (Message Bus)

SO events allow decoupled broadcasting across the project. Events fire regardless of whether listeners are currently active.

Examples:

Weapon Switcher: Raises a “WeaponSwitched” event. Listeners include the weapon UI (updates graphics and mode display), cursor manager (changes cursor), and inactive weapons (reset states).

Camera Shake: Explosions or impacts raise a “Shake” event with intensity. The effect manager accumulates and blends multiple events with upper intensity limits.

This creates scalable interactions without rigid references.
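
A minimal sketch of the message-bus pattern, using the camera shake case. It is written in engine-agnostic Python, not Unity C#; `EventChannel` stands in for a ScriptableObject event asset, and the additive accumulation, the cap of 1.0, and the decay rate are assumptions chosen for illustration.

```python
from typing import Callable, List


class EventChannel:
    """Hypothetical stand-in for a ScriptableObject event asset."""

    def __init__(self) -> None:
        self._listeners: List[Callable[[float], None]] = []

    def register(self, listener: Callable[[float], None]) -> None:
        self._listeners.append(listener)

    def unregister(self, listener: Callable[[float], None]) -> None:
        self._listeners.remove(listener)

    def raise_event(self, payload: float) -> None:
        # Iterate over a copy so listeners may unregister during dispatch.
        for listener in list(self._listeners):
            listener(payload)


class ShakeManager:
    MAX_INTENSITY = 1.0  # assumed upper limit

    def __init__(self, channel: EventChannel) -> None:
        self.intensity = 0.0
        channel.register(self.on_shake)

    def on_shake(self, amount: float) -> None:
        # Overlapping shakes add up, but never beyond the cap.
        self.intensity = min(self.MAX_INTENSITY, self.intensity + amount)

    def tick(self, dt: float, decay_per_second: float = 2.0) -> None:
        self.intensity = max(0.0, self.intensity - decay_per_second * dt)


shake_channel = EventChannel()
shake_channel.raise_event(0.3)  # no listeners yet: raising is still safe
shake = ShakeManager(shake_channel)
shake_channel.raise_event(0.4)  # explosion
shake_channel.raise_event(0.5)  # impact
shake_channel.raise_event(0.6)  # the sum exceeds the cap and is clamped
```

The sender only knows the channel, so explosions and impacts can raise shakes without any reference to the camera or the effect manager.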

3. Local UnityEvents

Local UnityEvents handle logic internal to a prefab—keeping its behavior self-contained, observable, and easy to debug.

Examples:

The Sensor System detects player projectiles or homing missiles. It raises a local event that switches the Pathfinding module into avoidance mode. The Fire Control module simultaneously triggers a flare launch event.

An NPC’s destruction runs a series of UnityEvents that manage changes and reactions inside the prefab: raising events, triggering VFX (explosion and smoke), switching sprites, and so on.

Each prefab stays autonomous but participates in the global system cleanly.

The combat in Hard Deck relies on dense, overlapping events: missiles in the air, muzzle flashes, impact sparks, smoke trails, secondary explosions, surface-aware decals, and dozens of small gameplay cues firing in parallel. To handle that load predictably, I treated object pooling as a first-class architectural subsystem rather than an optimization pass. Every dynamic element in the game—from weapons to environment interactions—runs through a unified lifecycle controlled by the pooling system.

The motivation is straightforward: creating and destroying objects at runtime is expensive, unpredictable, and directly at odds with the game’s pacing. Hard Deck benefits from stable frame times more than raw framerate. Pooling adds that stability early in development by turning objects into reusable resources with deterministic behavior, which keeps large bursts of activity from causing allocation spikes.

Persistent Manager in Additive Scene

The pooling system runs from a dedicated additive scene that stays active for the entire session. This keeps all pools initialized and accessible regardless of where the player is in the world or how scenes change around them. Weapons, VFX, decals, and AI helpers can request objects immediately at any time, without waiting for loading phases or domain initialization.

This mirrors how other foundational systems (input, event channels, data managers) operate, as they are always present.

Configurable Pools With Async Loading & Unloading

Pools are organized into pool groups tied to mission phases, biomes, or level segments. When the environment changes, the system can selectively load the required pools and unload the ones no longer needed.

Pools are created asynchronously so that scene transitions don’t result in heavy initialization spikes. Combined with the persistent additive scene, this ensures that loading new areas remains smooth while the active pools remain fully operational for ongoing gameplay.
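
As a rough illustration of both ideas (selective group loading and spreading instantiation over several frames), here is an engine-agnostic Python sketch. The group names, pool names, counts, and the per-frame budget are hypothetical; in Unity the slicing would more likely be a coroutine or an async operation.

```python
from typing import Dict, Iterator, List, Set, Tuple

# Hypothetical pool groups: group name -> {pool name: instance count}
POOL_GROUPS: Dict[str, Dict[str, int]] = {
    "common": {"tracer": 8},
    "desert": {"dust_burst": 6, "sand_decal": 4},
    "urban": {"glass_debris": 5, "concrete_decal": 4},
}


def plan_transition(loaded: Set[str], required: Set[str]) -> Tuple[List[str], List[str]]:
    """Return (groups to load, groups to unload) for an environment change."""
    return sorted(required - loaded), sorted(loaded - required)


def prewarm_in_slices(groups: List[str], per_frame: int) -> Iterator[List[str]]:
    """Yield one small batch of pool names to instantiate per frame."""
    queue = [
        pool
        for group in groups
        for pool, count in POOL_GROUPS[group].items()
        for _ in range(count)
    ]
    for start in range(0, len(queue), per_frame):
        yield queue[start:start + per_frame]


# Moving from a desert segment into an urban one; "common" stays loaded.
to_load, to_unload = plan_transition({"common", "desert"}, {"common", "urban"})
frames = list(prewarm_in_slices(to_load, per_frame=3))
```

Each yielded batch is small enough to fit a frame budget, so the cost of building nine objects is paid over three frames instead of one.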

Unified Object Lifecycle

Every pooled object follows the same lifecycle: initialized once, reused many times, and reset through lightweight callbacks. This removes the overhead of constant instancing and destruction, but more importantly, gives the game predictable behavior during high-intensity moments.

Because all gameplay-critical systems use the same interface, the pooling layer becomes a shared language across weapons, VFX, AI utilities, and decals.
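
The lifecycle described above (create once, reuse, reset through a callback) can be sketched as follows. This is engine-agnostic Python, not the project's Unity C# code; `Pool`, `Tracer`, and `reset_tracer` are hypothetical names.

```python
from typing import Callable, Generic, List, TypeVar

T = TypeVar("T")


class Pool(Generic[T]):
    """Generic pool: objects are created once, then reused after a reset."""

    def __init__(self, factory: Callable[[], T], reset: Callable[[T], None],
                 prewarm: int = 0) -> None:
        self._factory = factory
        self._reset = reset
        self._free: List[T] = [factory() for _ in range(prewarm)]
        self.created = prewarm  # how many instances were ever built

    def get(self) -> T:
        if self._free:
            return self._free.pop()
        self.created += 1  # pool exhausted: grow instead of failing
        return self._factory()

    def release(self, obj: T) -> None:
        self._reset(obj)  # lightweight reset instead of destroy + create
        self._free.append(obj)


class Tracer:
    def __init__(self) -> None:
        self.distance = 0.0
        self.active = False


def reset_tracer(tracer: Tracer) -> None:
    tracer.distance = 0.0
    tracer.active = False


tracers = Pool(Tracer, reset_tracer, prewarm=2)
first = tracers.get()
first.active = True
first.distance = 40.0
tracers.release(first)  # returned to the pool in a clean state
again = tracers.get()   # the same instance comes back, no new allocation
```

Because every pooled type goes through the same `get` and `release` calls, callers do not need to know what kind of object they are recycling.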

Nested & Cascade Spawning

Many of Hard Deck’s effects are compositions of several smaller elements with different lifetimes. A missile strike may produce a flash, dust burst, debris, smoke trail, shockwave, and light pulse — all triggered in sequence and all cleaned up independently.

Instead of large monolithic prefabs containing everything, each sub-effect is its own pooled entity. This makes effects easier to iterate on, reduces memory usage, and naturally supports chaining or reusing the same sub-effects elsewhere in the game.
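
One way to picture a cascade is as a recipe of independent sub-effects, each with its own delay and lifetime. The sketch below is engine-agnostic Python with hypothetical effect names and timings (in milliseconds); it only computes the spawn and release schedule and does not touch a real pool.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class SubEffect:
    name: str         # which pool the element would come from
    delay_ms: int     # offset from the start of the parent effect
    lifetime_ms: int  # time until the element returns to its pool


# Hypothetical recipe for a missile strike.
MISSILE_STRIKE = [
    SubEffect("flash", 0, 100),
    SubEffect("shockwave", 50, 400),
    SubEffect("debris", 100, 1500),
    SubEffect("smoke", 200, 3000),
]


def schedule(recipe: List[SubEffect], start_ms: int) -> List[Tuple[int, str, str]]:
    """Return (time, action, name) tuples sorted by time."""
    events = []
    for fx in recipe:
        spawn_at = start_ms + fx.delay_ms
        events.append((spawn_at, "spawn", fx.name))
        events.append((spawn_at + fx.lifetime_ms, "release", fx.name))
    return sorted(events)


timeline = schedule(MISSILE_STRIKE, start_ms=10_000)
release_order = [name for _, action, name in timeline if action == "release"]
```

The flash is already back in its pool while the smoke is still visible, which is the independent cleanup a single monolithic prefab cannot provide.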

Hard Deck’s enemy logic is built on a state-driven framework shaped around the helicopter’s movement model. The goal was to construct opponents that behave with mechanical consistency under the same physical constraints as the player. The system is designed to respond to pressure—visibility, projectiles, obstacles, and distance—through predictable transitions rather than pre-scripted patterns. This produces encounters that feel systemic, where enemies corner, reposition, evade, and commit based on situational logic.

The AI layer sits on top of the same Rigidbody2D-based movement model the player uses. Instead of teleporting rotations or snapping between directions, every bot must physically accelerate, decelerate, and turn through weight and inertia. The state machine exists to coordinate this motion. Each state defines where the bot wants to move and aim, while the BotController enforces the actual physics. This keeps the system unified: there is no “AI movement” and “player movement”—only different inputs into the same flight model.
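
The "different inputs into the same flight model" idea can be shown with a one-dimensional toy model. This is an engine-agnostic Python sketch, not Rigidbody2D code; the class names, the acceleration limit, and the drag value are assumptions.

```python
from dataclasses import dataclass


@dataclass
class FlightInput:
    thrust: float  # requested thrust along one axis, in [-1, 1]


class FlightModel:
    """1D stand-in for a physics-driven airframe (values are assumptions)."""

    def __init__(self, max_accel: float = 10.0, drag: float = 0.5) -> None:
        self.max_accel = max_accel
        self.drag = drag
        self.position = 0.0
        self.velocity = 0.0

    def step(self, inp: FlightInput, dt: float) -> None:
        thrust = max(-1.0, min(1.0, inp.thrust))
        accel = thrust * self.max_accel - self.drag * self.velocity
        self.velocity += accel * dt  # velocity builds up gradually
        self.position += self.velocity * dt


def player_input(stick_x: float) -> FlightInput:
    return FlightInput(thrust=stick_x)


def bot_input(own_x: float, goal_x: float) -> FlightInput:
    # The state decides where to go; it never sets velocity directly.
    return FlightInput(thrust=1.0 if goal_x > own_x else -1.0)


player, bot = FlightModel(), FlightModel()
for _ in range(50):  # one simulated second at 50 steps per second
    player.step(player_input(1.0), dt=0.02)
    bot.step(bot_input(bot.position, goal_x=100.0), dt=0.02)
```

Given the same requested thrust, the bot and the player end up in exactly the same place, because neither can bypass the model.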

Integration With Detection Systems

State transitions are triggered by contextual signals (FoV, projectile detection, proximity thresholds, etc.). These detections raise UnityEvents or ScriptableObjectEvents, which the state machine listens for. This keeps transitions decoupled from perception logic and makes each module replaceable.
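
A small sketch of signal-driven transitions, in engine-agnostic Python. The state names, signal names, and the transition table are hypothetical; in the project the signals would arrive as events from the detection modules.

```python
from typing import Dict, List, Tuple

# (current state, signal) -> next state; all names are hypothetical.
TRANSITIONS: Dict[Tuple[str, str], str] = {
    ("patrol", "player_in_fov"): "chase",
    ("chase", "player_lost"): "patrol",
    ("patrol", "projectile_detected"): "evade",
    ("chase", "projectile_detected"): "evade",
    ("evade", "threat_cleared"): "chase",
}


class StateMachine:
    def __init__(self, initial: str = "patrol") -> None:
        self.state = initial
        self.history: List[str] = [initial]

    def on_signal(self, signal: str) -> None:
        """Event handler: pairs missing from the table leave the state unchanged."""
        next_state = TRANSITIONS.get((self.state, signal))
        if next_state is not None and next_state != self.state:
            self.state = next_state
            self.history.append(next_state)


bot = StateMachine()
for signal in ["player_in_fov", "projectile_detected", "player_lost", "threat_cleared"]:
    bot.on_signal(signal)
```

The state machine never asks how a signal was produced, so a sensor can be swapped or retuned without touching the transition table.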

Inspector-Driven Control

Every state parameter can be adjusted in the Inspector: waypoints, safe zones, target priorities, cooldown durations, etc. This supports the common workflow for this project: quick iteration, predictable debug behavior, and clear separation between movement, decision-making, and environmental layout.

Dynamic Intent Assignment

Each AI module writes its output into shared intent. Pathfinding sets movement, Targeting sets orientation, the Attack module issues fire and weapon-mode, and a Countermeasure module handles dodge, flare, and chaff triggers. The controllers interpret these intents through physics and cooldown rules. This structure lets the enemy adapt to changing conditions without breaking the underlying logic.
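
The split between "modules write intent" and "controllers enforce rules" might look like this in outline. It is an engine-agnostic Python sketch; the `Intent` fields, module functions, and the 0.5 second cooldown are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Intent:
    move: Tuple[float, float] = (0.0, 0.0)
    aim_deg: float = 0.0
    fire: bool = False
    flare: bool = False


def pathfinding(intent: Intent, own, waypoint) -> None:
    intent.move = (waypoint[0] - own[0], waypoint[1] - own[1])


def targeting(intent: Intent, own, target) -> None:
    intent.aim_deg = math.degrees(math.atan2(target[1] - own[1], target[0] - own[0]))


def attack(intent: Intent, target_in_range: bool) -> None:
    intent.fire = target_in_range


def countermeasures(intent: Intent, missile_inbound: bool) -> None:
    intent.flare = missile_inbound


class WeaponController:
    def __init__(self, cooldown: float = 0.5) -> None:
        self.cooldown = cooldown
        self._ready_at = 0.0
        self.shots = 0

    def apply(self, intent: Intent, now: float) -> None:
        # The intent says "I want to fire"; the controller decides whether it may.
        if intent.fire and now >= self._ready_at:
            self.shots += 1
            self._ready_at = now + self.cooldown


intent = Intent()
pathfinding(intent, own=(0.0, 0.0), waypoint=(5.0, 0.0))
targeting(intent, own=(0.0, 0.0), target=(0.0, 10.0))
attack(intent, target_in_range=True)
countermeasures(intent, missile_inbound=False)

weapon = WeaponController()
for now in (0.0, 0.1, 0.6):  # the request at 0.1 s falls inside the cooldown
    weapon.apply(intent, now)
```

Each module owns one field of the intent, so adding or replacing a module does not disturb the others.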

Outcomes & System Behavior

The result is an AI layer that feels grounded in the game’s physical logic rather than abstract decision trees. Bots inherit the same constraints as the player, so their behavior emerges from mass, thrust, and rotational inertia. Patrol routes feel routine; chases feel deliberate; evasive maneuvers feel desperate rather than scripted. Because the state machine only manipulates intent, not motion, the entire behavior spectrum remains compatible with future states, expanded detection, and narrative-driven encounters.

Hard Deck is a large-scale production for a solo developer, and the scope required a level of iteration that is difficult to achieve through conventional means. At the start, my expectations were modest. I intended to rely on AI for small, localized tasks—mainly Unity Event integrations and basic scripting support. My previous work had already involved building complex behaviors from cascades of simple dependencies, so the assumption was that this would be enough to assemble a few lightweight projects.

The collaboration took a different direction. AI became both mentor and technical partner. Working through code in an applied, production-driven context accelerated my development pace far beyond what structured courses or isolated research could provide. Instead of trying to grow into a generalist programmer capable of manually implementing every subsystem, I shifted the emphasis toward architecture, scalability, and system boundaries. The AI handled mechanical implementation under explicit constraints, while I maintained full ownership over the design, logic, and intent.

This workflow matched established teamwork habits: clear communication, precise briefs, defined goals, and iterative feedback loops. The same production practices (expectations, constraints, definition of done) formed the basis of interaction with AI. Through repeated cycles of testing and refinement, the process matured into a reliable development pattern suited for building Hard Deck’s interconnected systems without losing direction or control.

Closing the Coding Gap

AI covered the coding side of implementation under defined constraints, allowing me to operate at a scale traditionally reserved for entire teams. It absorbed routine coding tasks while I focused on system logic, making advanced features achievable within a solo workflow.

Accelerated Learning Through Production

Working with AI in a live development environment replaced slow theoretical study with immediate, applied problem-solving. Each iteration exposed new patterns, reinforced best practices, and clarified architectural decisions, creating a learning curve that progressed in real time with the project itself.

Architectural and Creative Ownership

The systems, boundaries, and design intent remained fully under my control. AI produced drafts, while constraints, logic flow, and final implementation always followed the structure I defined, ensuring the game’s direction stayed consistent with its tone, mechanics, and long-term scalability.

A Natural Extension of Existing Workflow

The collaboration mirrored how I already work with teams: clear briefs, precise expectations, structured feedback loops, and iterative refinement. This made AI an intuitive addition to the pipeline—another tool that supports momentum without altering the development philosophy.

Movement Model & Physicality

Hard Deck’s flight model treats the helicopter as a machine with inertia, delayed authority, and predictable weight. Every encounter is shaped by physical constraints rather than arcade responsiveness, making movement itself a tactical resource.

Weapons, Tactics & System-Grounded Combat

Weapons follow operational logic—angles, timing, and exposure determine success more than raw accuracy. Combat relies on reading geometry and managing risk windows instead of relying on scripted patterns or arbitrary cooldowns.

AI Intent & Combat Behavior

Enemies act within the same spatial and physical limits as the player, producing behavior driven by perception and tactical reasoning rather than pre-set loops. Difficulty emerges from coherent decisions, not artificial stat boosts.

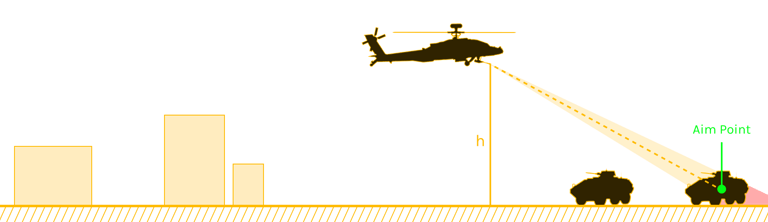

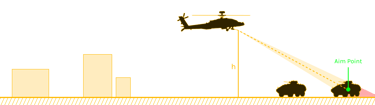

Spatial Combat Geometry

The height system gives the 2D space volumetric logic, turning terrain into active cover and creating meaningful altitude-driven decisions. Visibility, firing angles, and movement paths follow consistent vertical rules that shape every engagement.

Hard Deck’s movement model is built around a helicopter that behaves like a machine with mass, inertia, and delayed authority transfer. The aircraft transitions through acceleration curves and rotational drag that must be anticipated and managed. The player’s challenge is negotiating with the airframe’s physical constraints in real time. This creates a tactical layer where misalignment is a consequence of misreading momentum—not an artificial difficulty spike.

Inertia-Driven Navigation

The helicopter accelerates and rotates through curves that require planned inputs instead of immediate corrections, making spatial control a deliberate and tactical process.
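
To make "planned inputs instead of immediate corrections" concrete, the toy simulation below holds a yaw input, releases it, and measures how far the nose keeps turning afterwards. It is an engine-agnostic Python sketch; the torque and rotational drag values are assumptions, not tuning data from the game.

```python
def simulate_turn(hold_s: float, total_s: float, dt: float = 0.02,
                  torque: float = 180.0, angular_drag: float = 2.0):
    """Hold full yaw input for hold_s seconds, then release.

    Returns (heading at release, final heading) in degrees.
    """
    heading = 0.0
    omega = 0.0  # angular velocity, degrees per second
    hold_steps = round(hold_s / dt)
    total_steps = round(total_s / dt)
    heading_at_release = None
    for i in range(total_steps):
        if i == hold_steps:
            heading_at_release = heading
        yaw_input = 1.0 if i < hold_steps else 0.0
        # Rotational drag bleeds off spin gradually; it never stops it at once.
        omega += (yaw_input * torque - angular_drag * omega) * dt
        heading += omega * dt
    if heading_at_release is None:  # input was held until the end
        heading_at_release = heading
    return heading_at_release, heading


released, final = simulate_turn(hold_s=0.5, total_s=3.0)
overshoot = final - released  # degrees turned after the input was released
```

With these values the aircraft keeps rotating well past the point where the input ends, so a pilot aiming for a given heading has to let go early.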

This approach shifts the experience away from arcade maneuvering and toward controlled instability. Combat pressure becomes inseparable from flight mechanics, because every action carries the cost of the aircraft’s reaction time. Precision is earned through pattern recognition and predictive thinking rather than reflex alone. This keeps the helicopter at the center of the game’s logic, anchoring challenge and mastery in consistent physical behavior.

Continuous Motion Commitments

Once the aircraft commits to a heading or strafe, correcting that path takes time, turning positioning into a meaningful decision rather than an instant adjustment.

Exposure Created by Movement

Poor alignment or overcommitment opens the aircraft to enemy fire, allowing the environment and aircraft physics—not arbitrary values—to define risk moments.

Tension Emerging from Self-Management

The player must balance flying, aiming, and situational awareness with limited input bandwidth, reinforcing the sense of workload typical of real aircrews.

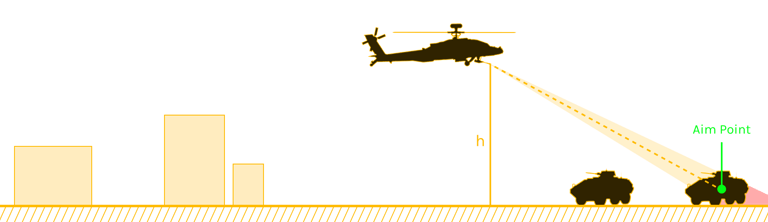

The height system transforms a flat 2D top-down perspective into a layered space where altitude, cover, and line-of-sight shape every engagement. Buildings, vegetation, and terrain possess vertical mass that affects movement and firing solutions. The player must constantly evaluate whether an approach path is masked, partially exposed, or wide open, turning navigation into a spatial puzzle that blends 2D pathing with 3D occlusion.

Projectile Interaction Based on Relative Altitude

Shots clear or collide depending on how their firing altitude compares to the object’s height, enabling intuitive interactions like shooting over sandbags but not over tall buildings.
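
A minimal sketch of that comparison, assuming discrete height categories and assuming that a shot collides with anything at or above its own firing altitude. The `Height` values and `shot_is_blocked` are illustrative names, not the game's actual API.

```python
from enum import IntEnum


class Height(IntEnum):
    GROUND = 0
    LOW = 1     # e.g. sandbags
    MEDIUM = 2  # e.g. trees
    HIGH = 3    # e.g. tall buildings


def shot_is_blocked(firing_height, obstacle_height):
    """Assumed rule: a shot is stopped by obstacles at or above its firing altitude."""
    return obstacle_height >= firing_height
```

Under this rule a shot fired from `Height.MEDIUM` clears sandbags but is stopped by a tall building.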

This vertical logic reinforces the game’s grounded tone by making the environment an active player in combat. Low passes hide the helicopter but restrict targeting; high approaches improve visibility but increase vulnerability. The world pushes back through its geometry, requiring players to solve tactical problems through altitude management and environmental reading rather than pure mechanical skill.

Consistent Height Values for All Objects

Every sprite—helicopter, buildings, vegetation, projectiles—uses a height category to determine obstruction, masking, and collision outcomes in a clean pseudo-3D ruleset.

Tactical Occlusion & Exposure Derived From Height

Line-of-sight and masking follow vertical relationships, allowing AI and player to leverage cover, break LOS, or create firing angles using altitude instead of purely horizontal movement.
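
One way to picture height-based line of sight is to interpolate the altitude of the sight line at each obstacle between observer and target. The sketch below does that under loud assumptions: continuous altitudes instead of categories, and obstacles supplied as hypothetical `(t, height)` pairs where `t` is the fraction of the distance along the line.

```python
def has_line_of_sight(observer_alt, target_alt, obstacles):
    """Return True if no obstacle reaches the sight line.

    obstacles: iterable of (t, height), with t in (0, 1) measured from the observer.
    """
    for t, height in obstacles:
        # Altitude of the straight sight line where it passes this obstacle.
        sight_alt = observer_alt + (target_alt - observer_alt) * t
        if height >= sight_alt:
            return False
    return True
```

Climbing raises the sight line over intermediate cover, which is how altitude can create a firing angle without any horizontal movement.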

Navigation Through Vertical Logic

The helicopter passes over low objects but must route around tall ones, turning environmental art into functional terrain with predictable navigational rules.

Combat in Hard Deck follows the operational vocabulary of real attack helicopters rather than the patterns of arcade shooters. Weapons aren’t treated as instantaneous actions but as systems with timing, exposure, and line-of-sight requirements. Laser-guided rockets reward precise approach angles and timing; missiles create engagement windows where both sides can influence the outcome. These layers transform shooting from a reaction test into a tactical exchange that depends on positioning and situational control.

TADS as Precision with Constraints

The targeting camera offers superior accuracy but restricts awareness and mobility, reinforcing the trade-off between surgical strikes and battlefield exposure.

The intent is not authenticity for its own sake, but to create combat pressure that feels earned through decision quality. The helicopter must commit to firing envelopes, target tracking, and safe breakaway angles, and the player must evaluate when risk is justified. This creates a steady loop of assess, commit, adjust, rather than fire until targets disappear. Combat becomes a negotiation between the aircraft’s capabilities and the player’s ability to exploit them under stress.

Weapons as Operational Systems

Laser guidance, line-of-sight demands, and post-launch tracking force the player to expose the aircraft during firing sequences, turning each shot into a decision with positional consequences.

Tactical Patterns Emerging Naturally

Pop-up attacks, standoff engagements, and delayed guidance tactics develop from system interactions rather than scripted instructions, giving players freedom to adopt authentic strategies.

Enemy Counterplay Anchored in Real Logic

Flares, smoke, and evasive maneuvers operate on timing windows rather than randomness, allowing enemies to resist player actions in a consistent and understandable manner.
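
A timing-window countermeasure can be expressed without any random roll. The sketch below assumes a flare only decoys a missile when it is released while the missile's time to impact lies inside a fixed window; the function name and both thresholds are hypothetical.

```python
def flare_defeats_missile(time_to_impact_at_release, earliest=2.0, latest=0.5):
    """Deterministic check: released too early or too late, the flare has no effect.

    earliest / latest are seconds before impact (assumed tuning values).
    """
    return latest <= time_to_impact_at_release <= earliest
```

Because the outcome depends only on timing, both the player and the AI can learn the window and play around it.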

Enemy AI is built around behaviors that mirror the same physical limits and tactical considerations the player faces. Rather than relying on preset loops, enemies respond to changes in visibility, pressure, and terrain through readable and consistent actions. This creates opponents that behave within the same spatial logic as the player, making each encounter feel grounded rather than manufactured.

Behavioral Consistency Across Encounters

Bots follow the same logic in similar situations, enabling players to learn enemy tendencies and build expectations through repeated observation.

The design goal is to let the AI’s internal reasoning emerge visibly through movement, space management, and reaction timing. Bots corner, reposition, or retreat because their situational interpretation pushes them toward those decisions—not because the game dictates a pattern. This approach produces encounters where players learn to anticipate behavior from observed intent, reinforcing a sense of fairness and tactical coherence.

Perception-Driven Behavior

Enemy reactions originate from what they currently “know”—line of sight, threat cues, and distance—creating believable delays, mistakes, and misreads rather than perfect omniscience.
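
As a sketch of perception-limited knowledge, the class below stores only what a bot last saw and how long ago, and lets that memory expire. It is an assumption-laden illustration, not the project's AI code; `Perception`, `believed_position`, and `memory_span` are invented names.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class Perception:
    last_seen_pos: Optional[Tuple[float, float]] = None
    time_since_seen: float = float("inf")

    def update(self, target_pos, visible, dt):
        """Refresh memory only while the target is actually visible."""
        if visible:
            self.last_seen_pos = target_pos
            self.time_since_seen = 0.0
        else:
            self.time_since_seen += dt

    def believed_position(self, memory_span=4.0):
        """Where the bot thinks the target is, or None once the memory is stale."""
        if self.time_since_seen <= memory_span:
            return self.last_seen_pos
        return None
```

A bot driven by `believed_position` keeps acting on a stale location after the player breaks line of sight, which produces the delays and misreads described above.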

Pressure Built From Systemic Decisions

Difficulty emerges from the AI making tactically sound choices—covering angles, avoiding exposure, timing countermeasures—rather than from hidden accuracy or damage bonuses.

Intent Expressed Through Motion

Chasing, holding distance, breaking line-of-fire, or dodging are outcomes of internal evaluation, allowing players to read behavior from positioning instead of relying on UI indicators.

Hard Deck is still in active development, and several systems are being expanded to push the game beyond moment-to-moment piloting and into the consequences that follow every decision the player makes. These features contribute to emergent gameplay and act as extensions of the game’s core logic—shaping how the helicopter survives, fails, and recovers across longer stretches of play. The intent is to let each encounter leave a mark on the airframe, the route, and the player’s available options, turning survival into more than a series of isolated fights.

Field Repair, Landing Risk, and Recovery Planning

Repairs require landing in designated safe zones and restore modules one stage at a time while disabling movement and weapons. This creates intentional vulnerability windows and ties recovery to geography. Full repairs remain gated behind helipads, turning routing and positioning into strategic decisions rather than logistical chores.
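
The staged repair rule could look roughly like the sketch below. It assumes four equal repair stages and a field cap at the third stage, with only helipads allowed to reach full health; these numbers and the name `repair_one_stage` are illustrative, since the feature is still in development.

```python
def repair_one_stage(health, max_health, at_helipad, stages=4, field_cap_stage=3):
    """Raise health to the next stage boundary, respecting the field-repair cap."""
    stage_size = max_health / stages
    next_stage = int(health // stage_size) + 1
    limit = stages if at_helipad else field_cap_stage
    # Never lower health: a module already above the field cap stays as it is.
    return max(health, min(next_stage, limit) * stage_size)
```

Each call represents one completed repair stage, during which movement and weapons would be disabled.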

All upcoming mechanics follow the same design ideology that defines the rest of the project: every system must reinforce the tone, support the narrative, and place the player inside the world rather than above it. Damage, scarcity, repair, and degradation become part of the story the player lives through. The goal is straightforward: the helicopter is not a resettable tool but a companion the player must carry through the campaign—its condition, failures, and recovery forming the connective tissue between gameplay and narrative.

Modular Health & Cascading Damage

Each major system—hull, engines, avionics, weapons—has independent health and armor values. Destroyed modules redirect further damage into the hull, creating predictable cascading failures instead of randomness. This makes every hit consequential: losing a component alters flight, targeting, or stability until the player commits to a repair window.
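
A minimal sketch of this damage routing, assuming flat armor subtraction and assuming that overflow from the destroying hit is discarded. The class names, module stats, and `apply_hit` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Module:
    health: float
    armor: float = 0.0

    @property
    def destroyed(self):
        return self.health <= 0.0


class Airframe:
    def __init__(self):
        # Placeholder values, not the game's balance numbers.
        self.modules = {
            "hull": Module(100.0, 5.0),
            "engines": Module(40.0, 2.0),
            "avionics": Module(25.0, 0.0),
            "weapons": Module(30.0, 1.0),
        }

    def apply_hit(self, target, damage):
        """Damage the targeted module; hits on a destroyed module go to the hull."""
        module = self.modules[target]
        if module.destroyed and target != "hull":
            module = self.modules["hull"]
        effective = max(0.0, damage - module.armor)
        module.health = max(0.0, module.health - effective)
        return module
```

Because the redirect is a fixed rule rather than a random roll, the player can predict what the next hit on a dead component will cost.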

Resource Pressure and Terrain-Linked Logistics

Repair kits, safe landing spots, and regional scarcity introduce operational limits that bind the aircraft’s condition to the structure of the map. Routes are shaped by threat density, recovery points, and consumable availability. Attrition becomes part of progression, and navigation becomes a planning problem layered on top of movement, combat, and AI behavior.

Degradation, Fire, and Persistent Pressure

Modules can degrade or ignite, applying continuous damage or performance penalties until addressed. These events introduce time pressure and force the player to manage evasion, suppression, and emergency stabilization simultaneously. Consequences persist beyond the fight, turning system failure into a long-term tactical consideration.

Layered Pseudo-3D Illusion

Hard Deck uses a layered approach to depth—lighting, parallax, and perspective-aware rigs—to create a 3D sense of space while remaining fully 2D. This direction supports the grounded tone of the game and gives me the level of control I need to shape visuals by hand without losing the efficiency of a 2D pipeline.

Shader Development

Shader work focuses on lightweight, purpose-built solutions. Current tools include lit 2D shaders, UV-based animation for rotors and decals, GPU skinning support, and simple VFX materials. This keeps the visuals efficient and adaptable until it’s time to introduce more advanced stylistic effects later in development.

UI and Technical Styling

The interface is primarily functional but already carries its own style — minimalist, military-inspired, and readable under stress. The green-toned visual language draws from real helicopter avionics, designed to feel integrated into the cockpit rather than layered over the game world.

Future Visual Direction

Once the core systems are in place, the visuals will start carrying more of the narrative weight: showing the accumulated wear of the helicopter and its crew, with the environment responding subtly to the consequences of earlier encounters. They’ll follow the same logic as the rest of the game: pressure builds, conditions worsen, and the world carries the marks of what has happened so far.

Hard Deck needed a visual style that felt physical and grounded but still workable inside a 2D pipeline. Full 3D asset production would have added more complexity than a solo project can comfortably absorb, and classic sprite animation felt too flat for the weight, banking, and mechanical movement the helicopter design depends on. I wanted the world to feel like it occupies real space through consistent cues that support the game’s tone and the player’s sense of immersion.

This led to a layered pseudo-3D approach: a set of visual systems that behave like 3D without leaving the speed and flexibility of 2D. It keeps asset creation manageable, preserves full artistic control, and supports the physical style of movement the game is built around. It also solves a practical problem: the camera occasionally zooms in. A traditional sprite animation stack wouldn’t survive close inspection; a fully rigged pseudo-3D setup does.

Dynamic 2D Lighting & Normal Maps

Hand-painted normal maps define the form of every major asset under directional light. Each surface responds with consistent shading that reinforces volume inside a 2D pipeline, letting the helicopter, terrain, and props read with stable depth cues as the camera and lighting angle shift.

Parallax Layers

Environment objects are built from layered sprites that represent different vertical sections of the asset. Each layer shifts depending on the camera position, creating controlled parallax inside an orthographic camera and giving trees and structures a sense of perspective and vertical depth.
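
The per-layer shift can be sketched as an offset proportional to the layer's height and to the object's displacement from the camera. This is only one plausible formulation; `layer_offset` and the `strength` factor are assumptions.

```python
def layer_offset(layer_height, object_pos, camera_pos, strength=0.05):
    """Shift for one sprite layer: higher layers lean further away from the camera centre."""
    dx = object_pos[0] - camera_pos[0]
    dy = object_pos[1] - camera_pos[1]
    return (dx * layer_height * strength, dy * layer_height * strength)
```

The base layer (height 0) never moves, while a treetop or roof layer slides outward as the object nears the edge of the view, which imitates perspective under an orthographic camera.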

Perspective-Aware Sprite Rigs

Key objects use bone rigs that deform as if they had thickness and orientation. The helicopter is the most developed example: dozens of skinned sprite parts supported by a large bone hierarchy. When it banks, lands, or accelerates, the visual motion resembles real mechanical behavior rather than a flat sprite rotating in place. Buildings and larger environment props use simplified rigging to introduce subtle perspective shifts tied to camera position.

Visual identity within a 2D engine

The layered illusion creates a look uncommon in top-down 2D shooters: assets feel volumetric and heavy while retaining the clarity of traditional 2D rendering.

Realism in service of immersion

The goal is to support the game’s tone — a machine under pressure in a physical world — through consistent visual cues that reinforce weight, space, and mechanical behavior.

A production shaped for solo development

The system keeps asset creation fast and editable. It allows the visuals to grow alongside gameplay without locking the project into high overhead.

IMPACT

The shader work in Hard Deck grew from two practical needs: performance and control. The project targets a wide range of PC hardware, including low-end machines, Steam Deck and other handheld platforms, so every rendering feature has to justify its cost. Writing lightweight custom shaders allows me to keep the visuals efficient, predictable, and aligned with the specific demands of the project.

The second motivation is flexibility. Hard Deck uses techniques — UV animation, perspective rigs, GPU-skinned sprites, multi-stage decals — that don’t map neatly onto Unity’s default materials. Custom shaders give me direct control over how these features work together and let the visuals evolve in step with gameplay and art direction rather than being constrained by fixed templates.

3D Lighting in a 2D Rig

The helicopter is built entirely in a 2D pipeline, with roll and pitch simulated through sprite deformation rather than real 3D rotation, so default 2D lighting doesn’t react to the motion. This custom shader procedurally adjusts tangent-space normals based on the helicopter’s roll and pitch values. The result preserves a fully 2D workflow while delivering the surface response of a true 3D asset—using only a single normal-map atlas.
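
The core of that adjustment is a rotation of each sampled normal by the current roll and pitch. The sketch below shows the math on the CPU in Python; in practice this would live in the fragment shader. The axis conventions (roll about y, pitch about x) and the name `tilt_normal` are assumptions.

```python
import math


def tilt_normal(normal, roll, pitch):
    """Rotate a unit tangent-space normal (x, y, z) by roll, then pitch (radians)."""
    x, y, z = normal
    # Roll: rotation about the assumed longitudinal (y) axis.
    cr, sr = math.cos(roll), math.sin(roll)
    x, z = x * cr + z * sr, -x * sr + z * cr
    # Pitch: rotation about the assumed lateral (x) axis.
    cp, sp = math.cos(pitch), math.sin(pitch)
    y, z = y * cp - z * sp, y * sp + z * cp
    return (x, y, z)
```

Since both steps are pure rotations, the normal stays unit length, so the lighting math that consumes it needs no extra normalization.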

UV-Driven Animation for Rotors and Decals

Some movements and visual effects are easier and more efficient to drive through UV manipulation than through rig animation. For example, the rotor rig handles perspective distortion, while the shader handles RPM through UV rotation. Decals work similarly: a tile-based UV shader picks random or logic-driven frames from a sprite sheet, so a single texture can supply many variations of a decal.
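
Both UV tricks reduce to a few lines of arithmetic. The sketch below shows them in Python rather than shader code; the function names and the row-major frame layout with row 0 first are assumptions.

```python
import math


def rotor_angle(rpm, time_s):
    """Rotor angle in radians at a given time, wrapped to one full turn."""
    return ((rpm / 60.0) * 2.0 * math.pi * time_s) % (2.0 * math.pi)


def rotate_uv(u, v, angle):
    """Rotate a UV coordinate around the texture centre (0.5, 0.5)."""
    c, s = math.cos(angle), math.sin(angle)
    du, dv = u - 0.5, v - 0.5
    return (0.5 + du * c - dv * s, 0.5 + du * s + dv * c)


def tile_uv(u, v, frame, columns, rows):
    """Map a 0..1 UV into the sub-rectangle of one frame in a sprite sheet."""
    col = frame % columns
    row = frame // columns
    return ((col + u) / columns, (row + v) / rows)
```

Feeding `rotor_angle` into `rotate_uv` spins the rotor texture without touching the rig, and choosing `frame` per decal instance lets one sheet cover many variations.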

GPU-Skinned Sprite Support for Rigs

The helicopter and some environment sprites rely on bone rigs. Custom variations of Sprite-Lit ensure they work cleanly with GPU skinning, which matters for both frame time and thermal limits on portable hardware. This also prepares the project for later stages where more skinned objects may appear in the player’s field of view.

Focused and Lightweight VFX Materials

Early VFX use simple unlit shaders or minimal additive/alpha variants to avoid heavy blending costs. Some elements—like tile-sheet fire or smoke animations—use motion-vector-based UV shifts for smoother transitions without GPU-intensive effects. A more stylized VFX pass will come later, once the final art direction and narrative tone solidify.

As the game grows, the shader framework will continue to expand in a controlled way. Early versions focus on stability, clarity, and keeping the pseudo-3D systems coherent, but the structure is already built to support more expressive visual work when the time is right. Directional lighting, height-aware shading, and a more atmospheric VFX layer will come later, once the narrative tone and final art direction settle. What matters now is a foundation that performs well on the hardware I’m targeting and behaves consistently with the systems that define the game. The shaders will evolve, but they’ll stay aligned with the same principles: predictable performance, clean visuals, and enough headroom to support the grounded visual style Hard Deck is built around.

At this stage of development, the UI in Hard Deck is primarily functional. The priority has been systems, movement, weapons, and the core loop, so the interface had to stay flexible rather than fully styled. Even so, the current prototype already carries the direction I want to follow: a clean, utilitarian HUD inspired by real helicopter avionics — specifically the display language of the AH-64’s TADS/PNVS and its various symbology layers.

Motivation

The main motivation behind the UI is restraint. A helicopter crew doesn’t have floating HP bars, glowing weak spots, or arcade indicators — their situational awareness comes from instruments, training, and the environment. I want to keep that mindset inside the game’s logic.

This means:

No enemy health bars

No hit markers or flashing silhouettes

No UI warnings for projectiles or incoming attacks

No “gamey” overlays that sit outside the fiction

Instead, feedback comes from worldspace cues — smoke, sparks, heat distortion, sound, and visible damage. Even simple things like muzzle flashes or dust trails from enemy missiles do more for immersion than UI popups ever would.

Planned Evolution

As more narrative and gameplay systems take shape, the UI will gradually shift toward a context-aware instrument panel:

Modes that adapt depending on camera zoom, weapon system, or flight regime.

Subtle animations that reflect pressure or damage rather than announce it.

Cleaner symbology consistent with real-world references.

Dynamic readouts that only draw attention when necessary.

The UI is meant to be simple but fully integrated. It should feel like part of the helicopter, something the crew actually relies on, not an abstract gameplay layer.

And as the world becomes more visually reactive (heat, damage wear, environmental pressure), the UI can afford to reveal even less. Ideally, by late development, the interface will behave more like a tool and less like an overlay. It will communicate what needs to be known, while the rest of the experience is handled visually and sonically by the environment and the helicopter itself.

The intention is not to imitate military UI for authenticity alone. What I need is a visual language that communicates only what is mechanically necessary, and does it in a way that feels embedded into the cockpit, not pasted on top of the game. The green monochrome look, timed indicators, numeric readouts, and sparse framing marks support that tone without overwhelming the player or breaking immersion.

Hard Deck has a defined visual direction. The groundwork is in place—the height system, pseudo-3D rigs, lighting pipeline, shader framework, VFX, and UI logic all establish how the game reads, moves, and reacts. These decisions define the aesthetic even more reliably at this stage than textures or color grading ever could. They give the project its structure, its constraints, and its internal logic.

The next stages will be more about refining a style that already exists in technical form. As level design, narrative pacing, and late-game mechanics settle into their final shape, the visuals will evolve to support them more directly.

This includes tighter control over palette and atmosphere, a more deliberate approach to material wear, and environmental changes that echo the state of the helicopter and its crew. These steps will be a natural continuation of a pipeline built to grow in complexity without losing its vision.

The long-term goal is a world where the visuals reinforce the tone as effectively as the systems do: grounded, exhausted, and shaped by consequences. Hard Deck’s final look will grow out of the logic already embedded in its mechanics, ensuring that style never competes with function and every visual choice elevates the experience rather than decorating it.

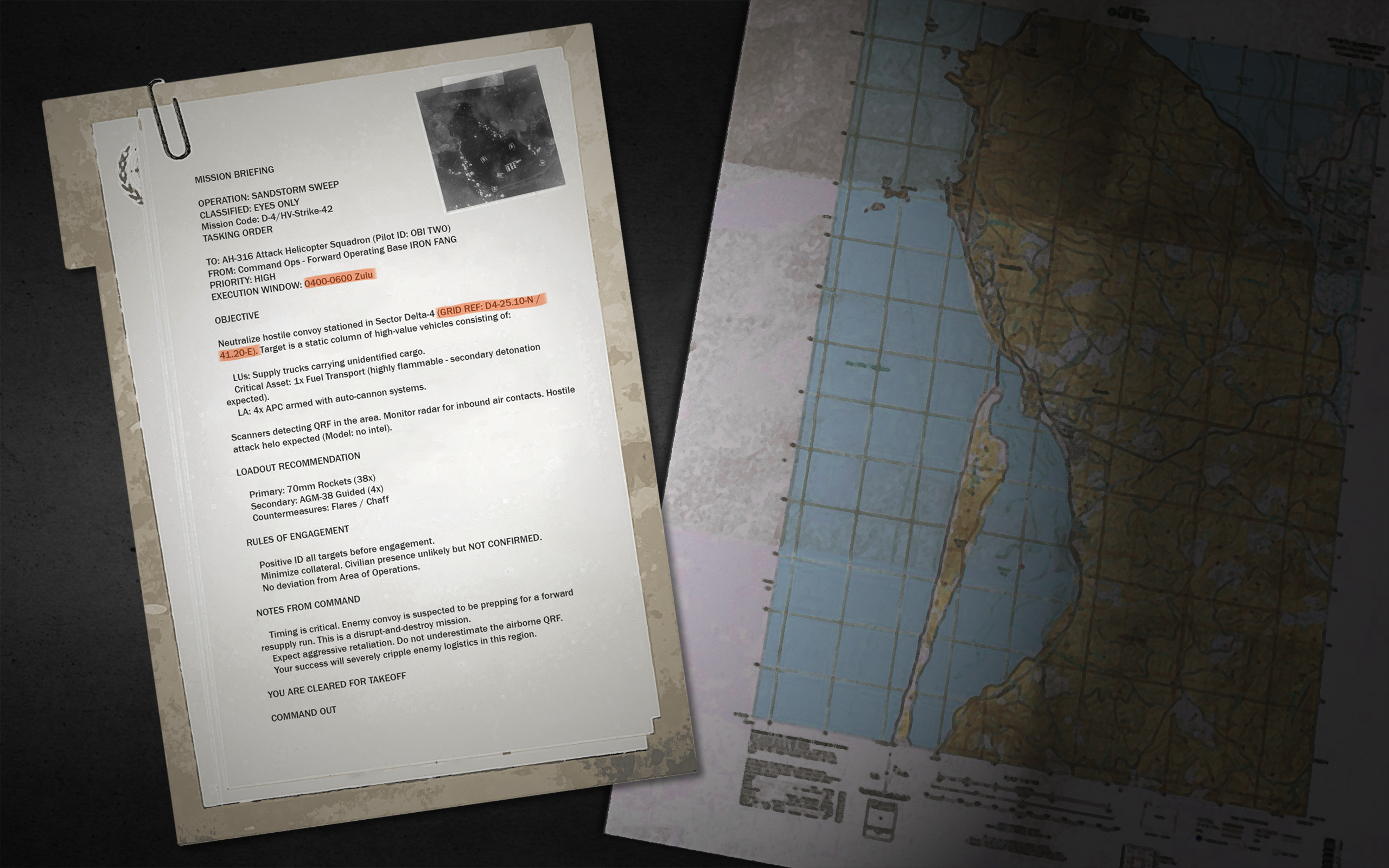

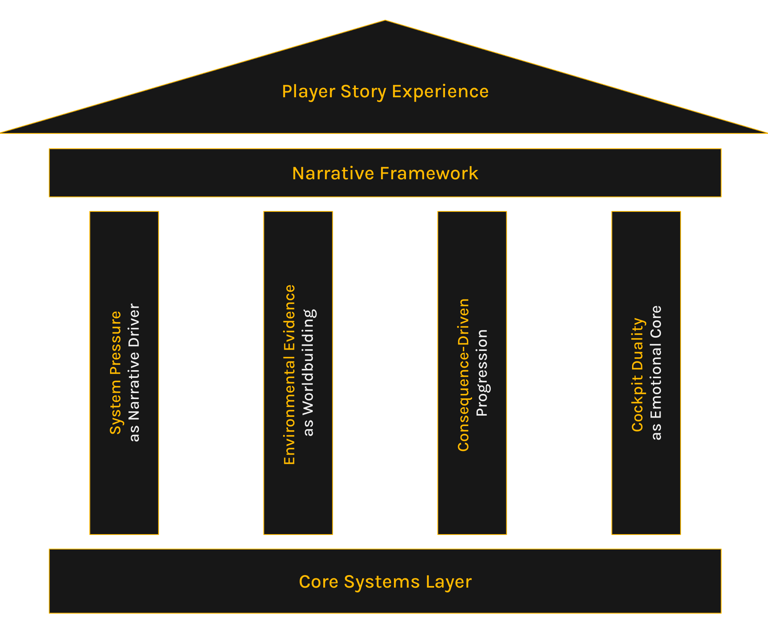

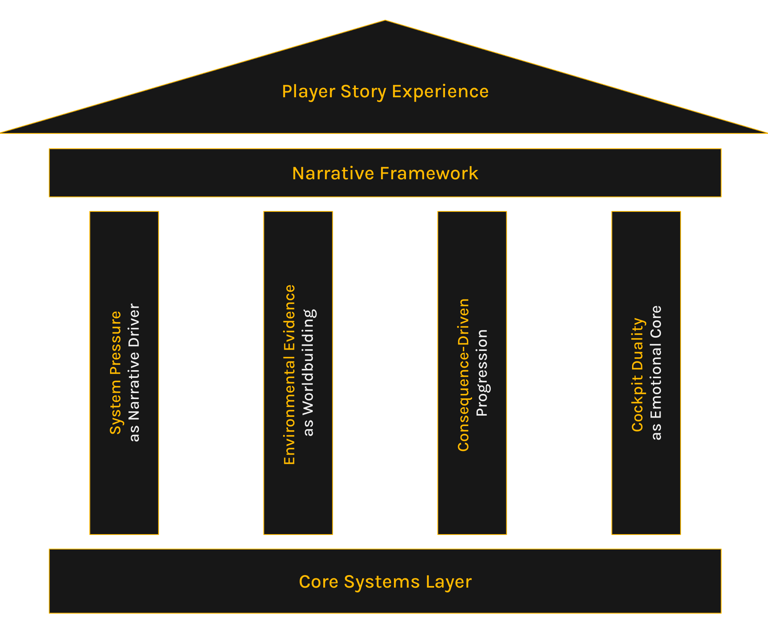

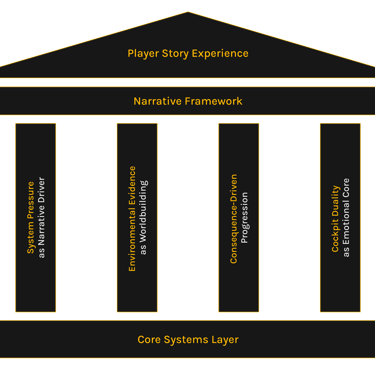

Narrative Intent Rooted in Physical Constraints

Hard Deck’s story grows directly from the pressure of operating a damaged helicopter in hostile territory. The narrative pillars—operational weight, silence, consequence, and environmental evidence—establish a grounded tone shaped by the project’s technical and production limits.

Character Dynamics Integrated Into Game Logic

Two trained pilots share the same aircraft but carry different internal motivations. Their dynamics are shown through work under stress, responses to consequences, and operational disagreements—and their backgrounds justify core systems such as low-altitude maneuvering and field repairs.

Structure Delivered Through Systems

The multi-act framework is expressed through zone access, encounter density, resource scarcity, and sensor-driven discovery. Story progression emerges from shifts in operational workload rather than cinematic transitions or dialogue-heavy sequences.

Environmental Signals as Narrative Tools

The world is built as a landscape of evidence: wrecks, fortified routes, civilian ruins, and faction positions that reveal the conflict’s shape. Systemic beats—engine failures, exposure during repairs, scans, and encounters—transform gameplay conditions into consistent narrative moments.

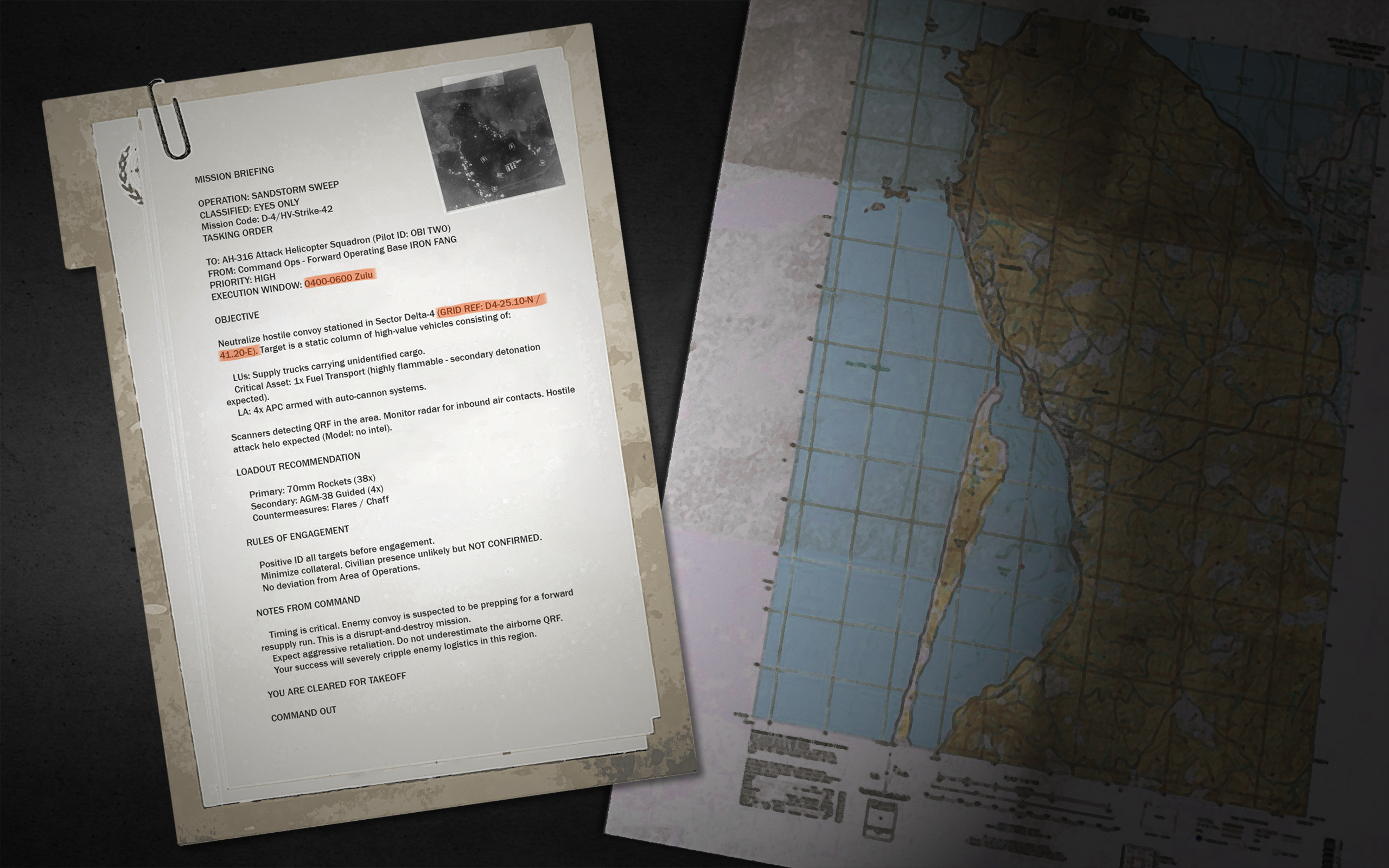

Hard Deck began with a specific story idea rather than a set of mechanics. The game was shaped around the emotional and operational pressure placed on two pilots navigating hostile territory with a damaged aircraft and incomplete information. Because the project is a solo effort with a top-down 2D pipeline, the narrative had to be expressed through systems the player touches constantly—altitude, visibility, module damage, field repairs, and the boundaries of the map. These constraints defined the entire narrative approach.

Story Built on Physical Pressure

The narrative relies on workload, risk, and scarcity instead of scripted drama. Height management, fuel limits, unstable modules, and narrow engagement windows create emotional weight through mechanical reality. This ensures that narrative beats emerge when the helicopter is under genuine stress, giving each moment a sense of authenticity that scripted events can’t replicate. The story stays grounded because the player experiences the same constraints and limitations that shape the crew’s decisions.

Constraints as Creative Boundaries

A 2D top-down perspective and limited VO steer the story toward sparse, professional dialogue and system-driven delivery. The lack of cinematic staging forces a grounded tone where events matter because of their impact on the aircraft. These limitations encourage a narrative approach built on inference, spatial reading, and controlled, perspective-based cutscenes that stay faithful to the game’s visual identity. Instead of compensating with heavy exposition, the story uses these constraints to stay focused and deliberate.

The Crew as Story Drivers and System Justification

The narrative centers on a two-person crew whose roles serve both emotional progression and mechanical clarity. Their contrasting backgrounds and personalities provide natural tension, while their training explains the player’s capabilities—field repairs, low-altitude flying, risk evaluation—without breaking immersion. This alignment allows character identity to justify abstractions such as repair stages or aggressive maneuvering, making the gameplay feel diegetic rather than gamified. The crew becomes the lens through which the player interprets the world and understands the helicopter’s behavior.

Narrative Principles Aligned to Gameplay

The core pillars—operational pressure, silence, environmental evidence, consequence-driven progression, and mutual dependence—act as constraints that guide every narrative decision. They ensure that tone remains consistent across mechanics, level layout, AI behavior, and pacing. These principles prevent tonal drift by anchoring the story to the helicopter’s physical reality, creating a unified experience where events, reactions, and player actions feel coherently connected. The narrative remains stable because every new system must fit inside the same thematic and mechanical boundaries.
